Ongoing Research Program Case Study:

How Banner Health Reached Consensus on Design Decisions with Recurring Research

A team at this 50,000-employee organization was struggling to align on design directions and digital priorities. A program of recurring, collaborative research created a shared understanding of users and their pain points — and helped the team get on the same page.

Challenge: Differing Opinions on How to Improve Designs

80% of Alzheimer’s research studies are delayed because too few people sign up to participate. Banner Health has been on a mission to change this equation.

With over 50,000 employees, 30 hospitals, and 1 million customers, Banner is one of the largest hospital systems in the U.S. In 2006, the Phoenix-based nonprofit created the Banner Alzheimer’s Institute. The Institute’s 3 comprehensive memory care centers have revolutionized the standard of care for Alzheimer’s patients. The Institute has also been a leader in scientific collaborations and clinical trials.

In 2016, Lesley Stahl featured the Institute’s innovative work in a 60 Minutes story, “The Alzheimer’s Laboratory.”

* * *

One of the Institute’s top initiatives is Alzheimer’s prevention research. Recruiting for prevention studies is particularly challenging because you need participants with certain types of genes.

To combat this challenge, the team launched the Alzheimer’s Prevention Registry and signed up over 350,000 members. They developed partnerships with top Alzheimer’s research organizations. And they built a Find a Study portal to connect Registry members with Alzheimer’s research studies across the U.S. and online.

The Banner team wanted to see more members engaging with this portal and connecting with study sites. But, along with their design and development agencies, they were struggling to agree on the parts of Find a Study that required improvement and on how to improve them. Fortunately, they had a tool to guide them toward consensus.

Approach: Recurring Collaborative User Research

Marketade worked with Banner and its design agency, Provoc, to create and implement a program of ongoing user research. The multi-year program was built around 2 premises:

- A deep understanding of users and their problems — gained through interviews and observation — is the best foundation for improving the customer experience.

- All stakeholders on the team (including designers, developers, product owners, and management) must observe the research and participate in the process of identifying findings and opportunities.

The centerpiece of the program was what we called the “participatory research session”: a 2-hour collaborative workshop where stakeholders reviewed research and then went through a process to reach consensus on problems and opportunities.

This approach stands in sharp contrast to the “report-driven approach” used for most UX research, where the researcher conducts and analyzes the research sessions and then presents findings and solutions to the team in a deck, perhaps with some quotes from users or video clips.

The report-driven approach is better than nothing and can produce big gains if no research has been done in the past. But over and over again, we’ve seen the team-based approach drive the biggest ROI, especially when built into a recurring process. That’s because seeing is believing. There’s nothing like directly watching users interact with products and struggle to achieve their goals, especially after you’ve heard what those goals are in their own words and learned why this person is such a great fit for your product. Reading about their struggles and seeing highlights doesn’t compare to extended observation of their full experience.

Studies lasted between 1 and 3 months. Here’s how the process worked for each study:

- We met with stakeholders to review upcoming design projects and decide on a topic for the study.

- We teamed up with Banner to recruit representative participants for the study. The Registry has an engaged email list and monthly newsletter. We took advantage of that, along with their social media channels, to build a user research panel of more than 125 potential participants. For the typical study, we recruited 5-8 participants who met our criteria.

- We scheduled and conducted 1:1 research sessions with participants. Most often these were a combination of open-ended user interviews and usability testing or concept testing.

- We condensed the full research video footage, typically 2 to 3 hours’ worth, to 1 hour.

- We ran the collaborative session with the extended team. We spent the 1st hour watching the research. In the 2nd hour, we ran an affinity mapping session to reach consensus on findings and to identify initial solution directions.

For affinity mapping, we used a modified version of the KJ Method as described by Jared Spool. Since the team included people in Arizona, Oregon, DC, and elsewhere, our approach was remote and used collaborative whiteboard software.

Before showing the research, we gave team members a focus question — such as “What are the biggest barriers to finding and participating in Alzheimer’s research studies?” — along with instructions on things to look for and the types of findings to capture.

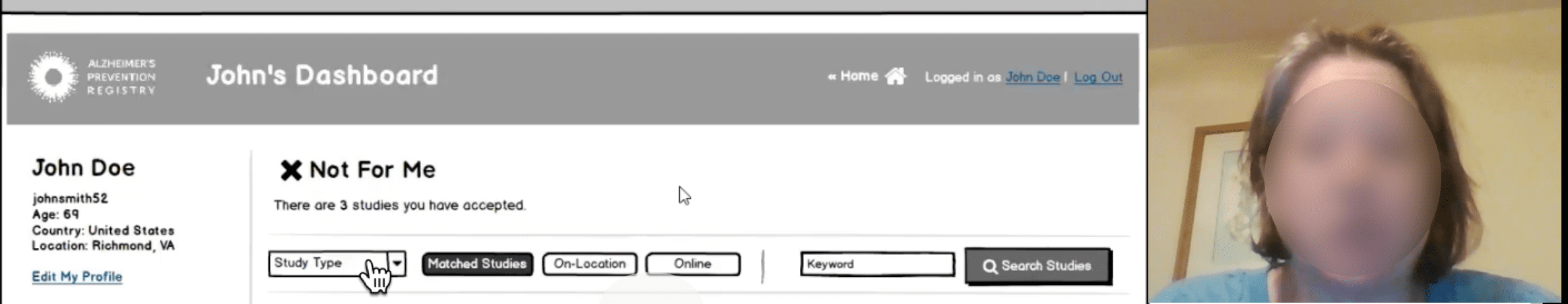

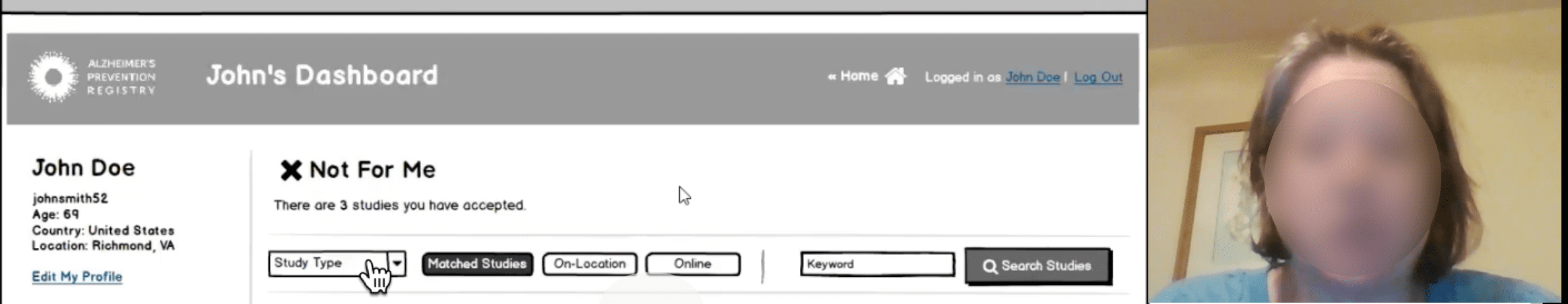

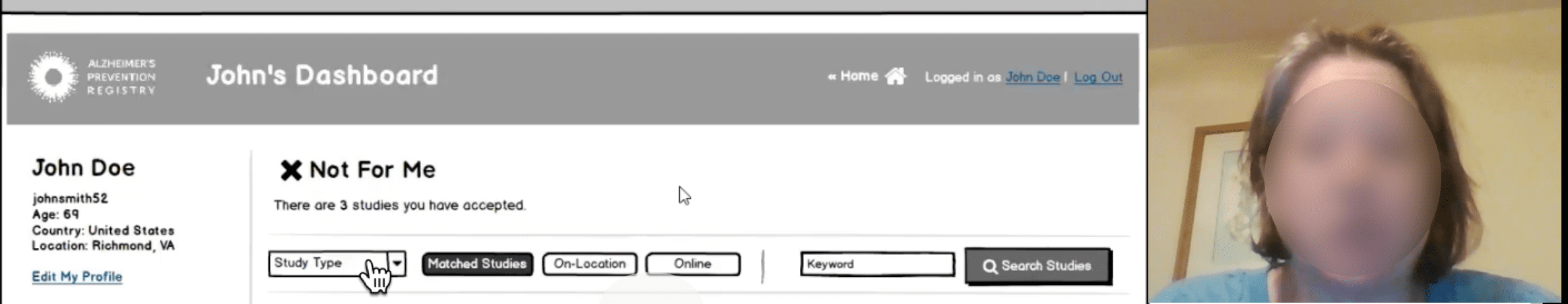

A user research participant thinks aloud while interacting with a prototype of a registry portal.

In tightly timed segments over the next 30 minutes or so, team members 1) posted their findings individually, then 2) grouped them, then 3) labeled the groups, and then 4) voted on top findings. Nearly all of this was done in silence to ensure all voices were heard, avoid groupthink and political debates, and keep things moving quickly.

By the end of this phase, we typically saw 3 barriers or findings emerge as the clear favorites. We captured all of the other findings and returned to many of them in the future. But unlike typical meetings, we didn’t waste precious time debating peripheral problems or pet projects in this session. The process kept us focused and marching toward consensus on the biggest issues.
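For teams that export votes from their whiteboard tool, the final tally can be sketched in a few lines of Python. This is only an illustration of the voting step, not the tooling used in this program: the `top_findings` helper and the group labels below are hypothetical, and the labels are not findings from the Banner studies.

```python
from collections import Counter


def top_findings(votes, k=3):
    """Return the k group labels with the most votes, highest first."""
    tally = Counter(votes)
    # most_common returns (label, count) pairs sorted by count, descending.
    return tally.most_common(k)


# Hypothetical ballots: each entry is one vote cast for a labeled group.
votes = (
    ["Search filters are confusing"] * 4
    + ["Study descriptions use jargon"] * 3
    + ["Unclear what happens after contacting a site"] * 2
    + ["Too many results far from home"] * 1
)

for label, count in top_findings(votes):
    print(f"{count} votes: {label}")
```

Groups that fall outside the top 3 stay in the tally, which mirrors the practice of capturing the other findings and returning to them later.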

With the top 3 barriers confirmed, we asked people to brainstorm solution ideas individually for a few minutes and then post their favorite ideas on the board under the appropriate barrier. If we had time, we did some quick voting on these ideas. Then we briefly summarized what we had accomplished, touched on next steps, and called it a day.

Outcome: Team Alignment on Key Decisions & Directions

This recurring research program allowed the team to reach a consensus on strategy and design for a variety of initiatives and concepts. These included a dashboard for study site researchers to manage their leads and participants and a dashboard for Registry members to track potential studies.

The Find a Study section was relaunched with a new design focused on solving the 3 barriers identified in a collaborative session. Prior to that research session, it felt like the list of issues to tackle with Find a Study was endless. Watching users go through the search experience allowed the group to quickly get on the same page regarding the biggest problems worth solving.
